Featured Projects

Enabling Automatic Graph Learning Pipelines with Limited Human Knowledge

ARC Future Fellowship (2022-2026)

High-end GPU server for AI research - Nvidia DGX A100

Griffith University Research Infrastructure Program (GURIP) (2024)

Implementation, process evaluation and cost effectiveness of the Australian Tommy’s App - A digital clinical decision tool to improve maternal and perinatal outcomes

MRFF - High-Cost Gene Treatments and Digital Health Interventions (2023-2025)

National data infrastructure to inform treatment in cerebral palsy

MRFF - Research Data Infrastructure Grant (2023-2026)

Temporal Graph Mining for Anomaly Detection

ARC Discovery Project (2024-2026)

Towards Interpretable and Responsible Graph Modeling for Dynamic Systems

CSIRO-NSF Responsible AI Grant (2023-2026)

Unmask HIV latency through disruption of HIV synapses

NHMRC Ideas Grant (2023-2026)

Effective Multi-Task Self-Supervised Learning for Graph Anomaly Detection

Amazon Research Grant Success (2022)

Featured Publications

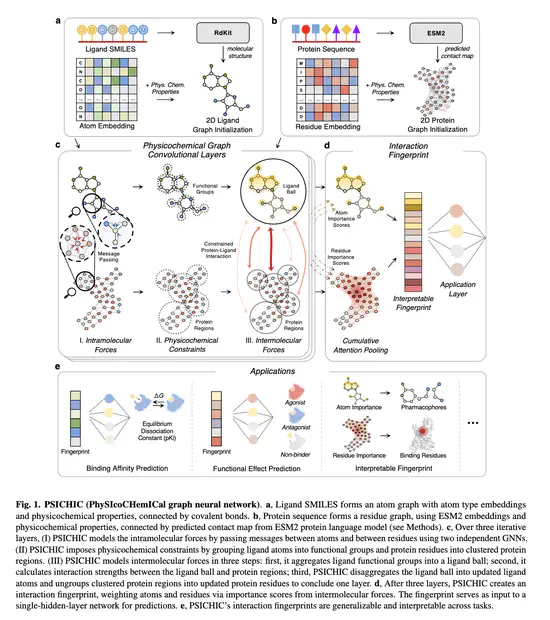

PSICHIC: physicochemical graph neural network for learning protein-ligand interaction fingerprints from sequence data

[Nature Machine Intelligence, 2024] This paper introduces PSICHIC, a graph neural network framework that leverages physicochemical constraints to predict protein-ligand interactions directly from sequence data. PSICHIC achieves state-of-the-art accuracy in binding affinity prediction, even surpassing existing structure-based methods in certain cases. Furthermore, its interpretable fingerprints illuminate the specific protein residues and ligand atoms involved in these interactions, offering a promising tool for virtual screening and enhancing our understanding of protein-ligand mechanisms.

Trustworthy Graph Neural Networks: Aspects, Methods and Trends

[PIEEE, 2024] This paper discusses the growing relevance of graph neural networks (GNNs) across various real-world applications, from recommendation systems to drug discovery, emphasizing the need for trustworthy GNNs beyond task performance. The survey proposes a comprehensive roadmap for building such GNNs, addressing six key aspects: robustness, explainability, privacy, fairness, accountability, and environmental well-being. Additionally, it highlights the interrelations among these aspects and presents future directions for advancing trustworthy GNN research and its industrial applications.

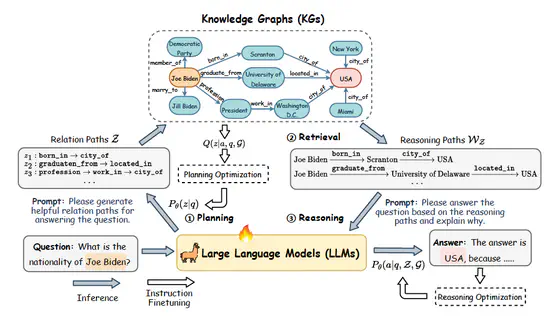

Reasoning on Graphs: Faithful and Interpretable Large Language Model Reasoning

[ICLR, 2024] In this paper, we introduce Reasoning on Graphs (RoG), a method that couples Large Language Models (LLMs) with Knowledge Graphs (KGs) to address two LLM limitations: a lack of up-to-date knowledge and hallucinations during reasoning. RoG uses a planning-retrieval-reasoning framework: it first generates relation paths grounded in the KG as plans, then uses those plans to retrieve valid reasoning paths from the KG, on which the LLM bases faithful and interpretable reasoning. RoG achieves state-of-the-art performance on benchmark KG reasoning tasks.

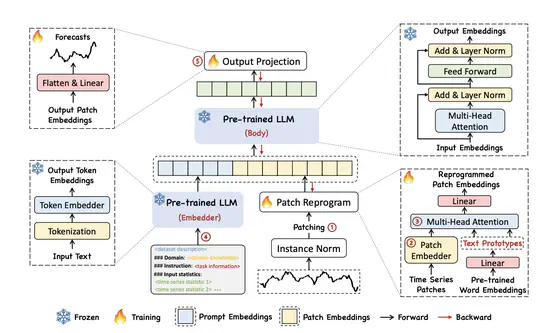

Time-LLM: Time Series Forecasting by Reprogramming Large Language Models

[ICLR, 2024] This work introduces Time-LLM, a novel reprogramming framework that adapts Large Language Models (LLMs) for general time series forecasting, overcoming the challenges of data sparsity and modality alignment between time series and natural language. By reprogramming time series data with text prototypes and employing the Prompt-as-Prefix (PaP) technique for enriched input context, Time-LLM demonstrates superior forecasting performance, outshining specialized models in both few-shot and zero-shot learning scenarios.

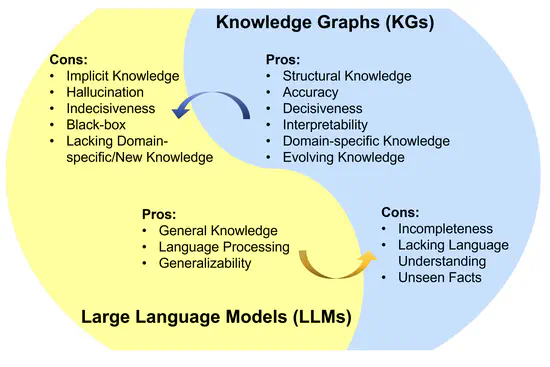

Unifying Large Language Models and Knowledge Graphs: A Roadmap

[TKDE, 2024] This article introduces a roadmap for integrating Large Language Models (LLMs) such as ChatGPT and GPT-4 with Knowledge Graphs (KGs) to leverage their complementary strengths in natural language processing and artificial intelligence. It outlines three frameworks for this unification: KG-enhanced LLMs, LLM-augmented KGs, and a synergistic approach, aiming to improve both factual knowledge access and interpretability while addressing the challenges of KG construction and evolution.