Vision

The TrustAGI Lab at Griffith University aims to be a pioneering force in Trustworthy Artificial General Intelligence (AGI) and is committed to:

- Advancing AGI Research: The lab aims to move the field of AGI forward by spearheading the development of novel AI algorithms. Our goal is to endow machines with human-level intelligence and, in doing so, substantially advance the capabilities of AI.

- Ensuring Trustworthiness and Transparency: Recognizing the critical importance of trust and transparency in AI technologies, the TrustAGI Lab is dedicated to providing practical solutions to the key challenges of deploying AGI. Our focus encompasses explainability, safety, robustness, fairness, and privacy. By advancing research in these areas, we aim to build AGI systems that are not only powerful but also ethical and accountable.

Research Areas

AGI Research

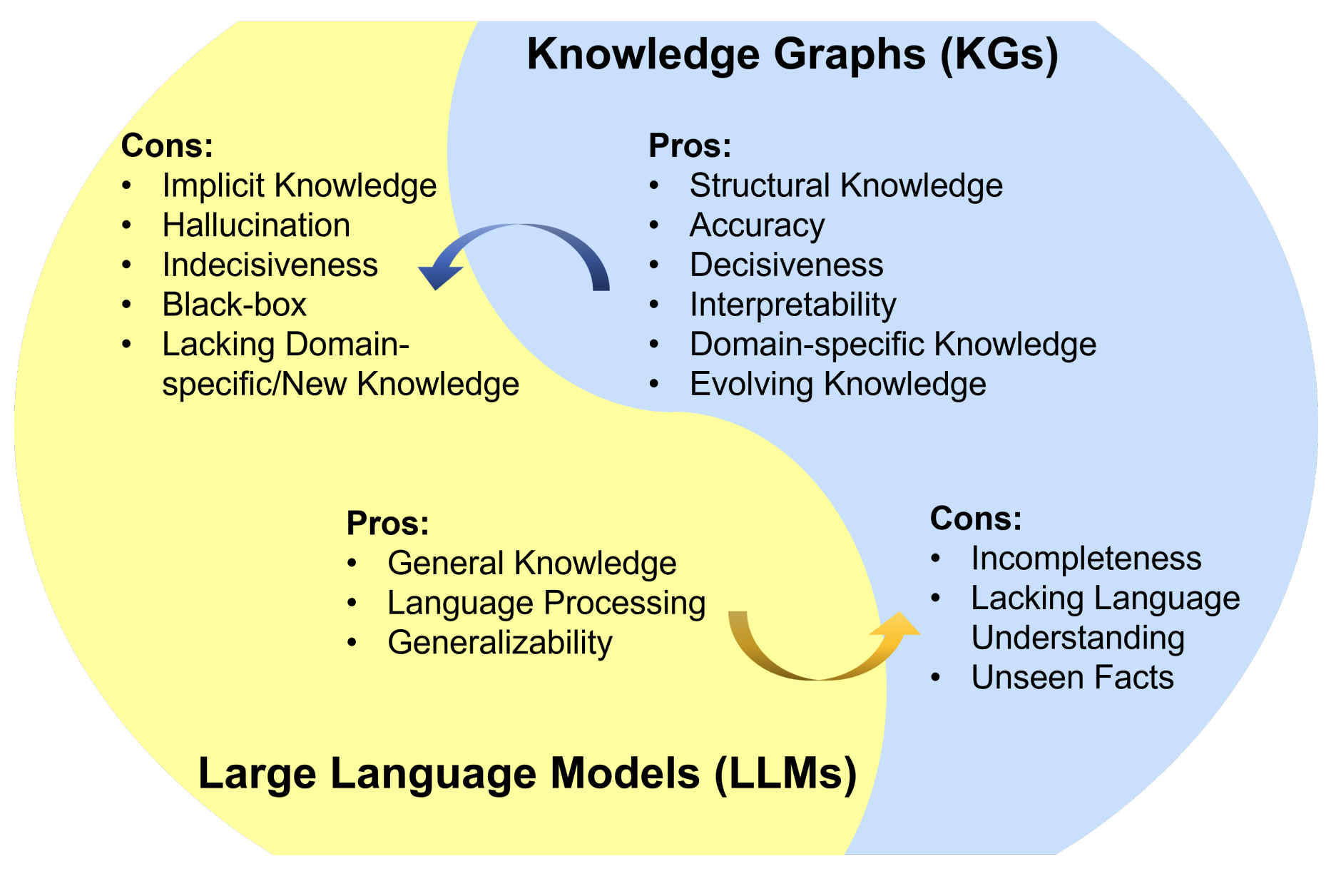

Large Language Models:

Exploring the capabilities and applications of large language models, this research area advances natural language processing and understanding, contributing to the development of sophisticated language-based AI systems.

Graph Machine Learning:

Investigating the intersection of machine learning and graph theory, this research theme aims to enhance AI models’ ability to analyse and interpret complex relationships, driving advances in domains such as social networks, biology, and recommendation systems.

Knowledge Representation and Reasoning:

Focused on a fundamental aspect of AI, this area develops robust frameworks for representing knowledge and reasoning with it, contributing to more intelligent and context-aware AI systems.

Time Series Analysis:

Addressing the temporal dimension in data, this research theme explores techniques for effective analysis and prediction of time series data, crucial for applications in finance, healthcare, and other dynamic domains.

Recommender Systems:

Centred on enhancing user experiences, this research area refines and advances recommender systems to deliver personalized and effective content recommendations across a range of applications.

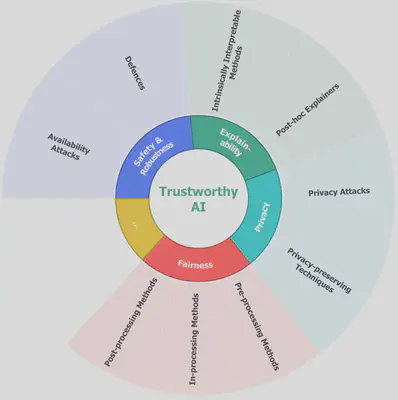

Trustworthy AI Research

Explainability:

Investigating methods for developing interpretable and explainable AI models, this research area emphasizes transparency in decision-making processes. The focus includes explainable machine learning models, visualization techniques, and interpretable deep learning algorithms, applied particularly in healthcare, finance, and social sciences.

Safety and Robustness:

Addressing risks and vulnerabilities in AI technologies, this research theme explores techniques to ensure the safety, resilience, and robustness of AI systems. Areas of focus include adversarial machine learning, robust optimization, attacks and defences, and secure AI deployment in real-world environments.

Fairness:

Focused on mitigating biases and discriminatory outcomes in AI decision-making, this area investigates fairness-aware machine learning, bias detection and mitigation, and ethical considerations in AI development. The lab also aims to provide practical guidelines and tools for practitioners to promote fairness in AI applications.

Privacy:

Researching privacy attacks and privacy-preserving AI techniques, this theme addresses the critical concern of protecting sensitive data and individuals’ privacy rights. Topics include federated learning, secure multi-party computation, and privacy-enhancing technologies for AI applications in healthcare, finance, and social media.